A Message From the CEO

Dear Partner,

Talk about data quality has risen to a roar of late, and some of you have asked me to comment on it and to share what L&E Research is doing to ensure you get quality data, today and tomorrow, so you can make the best decisions. As a 30+ year veteran of the industry, I have been pondering whether market research has reached a crossroads. Please read on with your cup of coffee (or your beverage of choice…no judgment here) to learn what’s going on, and what L&E is doing to raise the bar on data quality.

A Quick Synopsis on Data Quality

Let’s review what has recently transpired as it relates to panel quality.

- The conclusion was that sample is riddled with fraud. Bots (technology-created personas that are given credentials to emulate humans) and “fraudsters” (people who are not who they say they are) pervade the sample landscape.

- Last year Dynata, a 2018 merger of SSI and Research Now (creating the largest panel company in terms of both revenue and panel size), filed for Chapter 11 and reorganized through the courts, eliminating over $500 MM in debt. (I assume I do not have to expound on why the bankruptcy of the largest panel company in the space is a problem that bears on data quality.)

- In the qualitative space, Kimberly Joyful of Paid For Your Say promises to teach consumers how to plug into the larger #mrx ecosystem to get into more studies that pay. While the group’s website promotes an altruistic outcome, the reality is that its leader is a former researcher teaching her audience, now 11k+ strong, how to cheat in order to gain access to paid research studies.

- And finally (but will it be the last??), last week a company in the #mrx space, Opinions 4 Good (rebranded as Slice), was federally indicted on charges of fraud for selling fabricated data as legitimate consumer opinions via the use of “ants” (people creating fake accounts to complete the surveys, with the leadership not only allowing it, but actively enabling it and in fact doing it themselves).

The latest federal fraud case is alarming in that company leadership knowingly falsified data (if the formal accusation is true). But as I have outlined above, the bells have been ringing for quite some time that the #mrx ecosystem has a quality control problem. Everyone says their sample is “high quality.” But how do you know? I want to take a moment to show how some companies collect data, how L&E collects data today, and the engineering we’re undertaking for an even better solution tomorrow.

The Sample Ecosystem

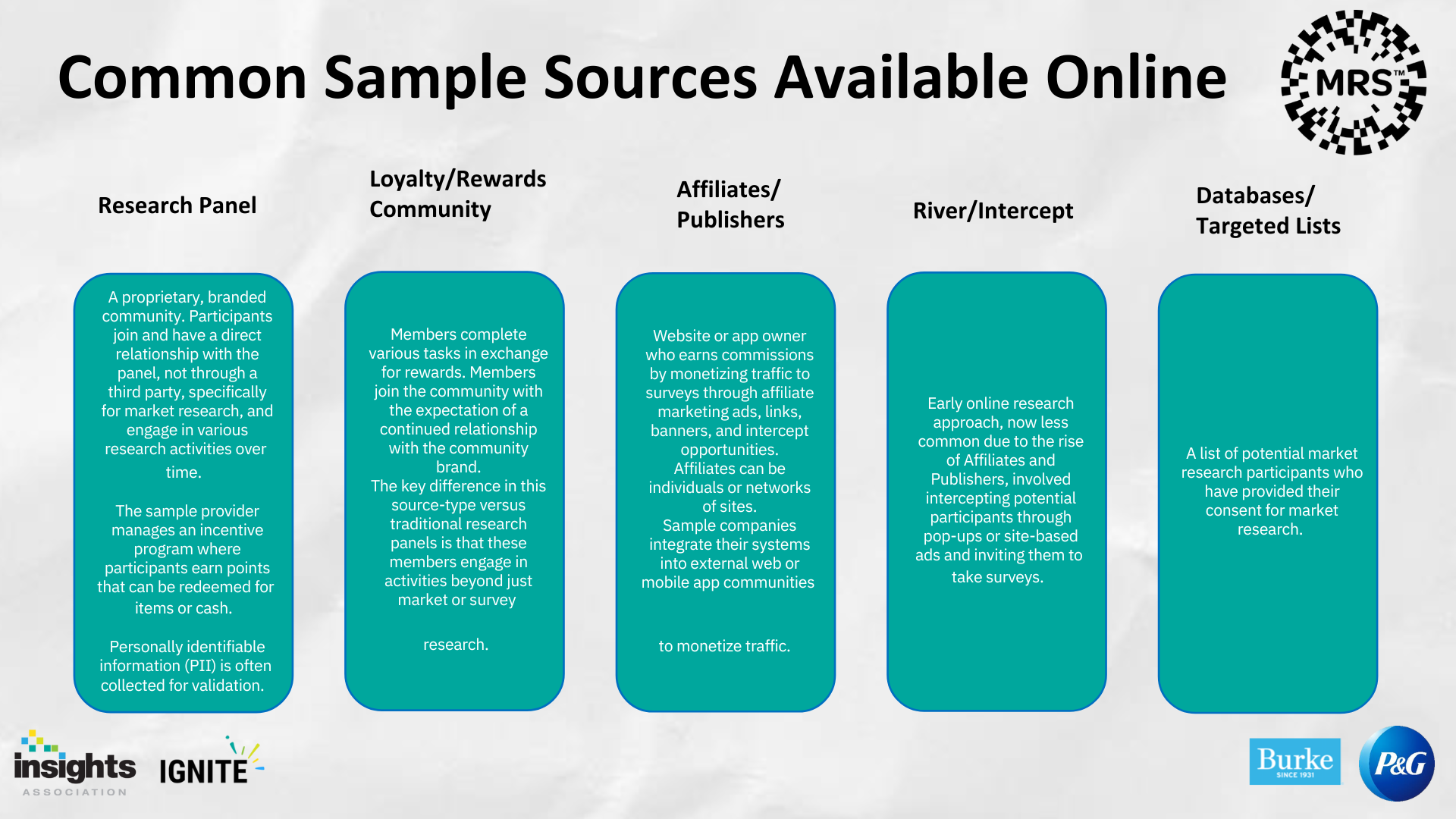

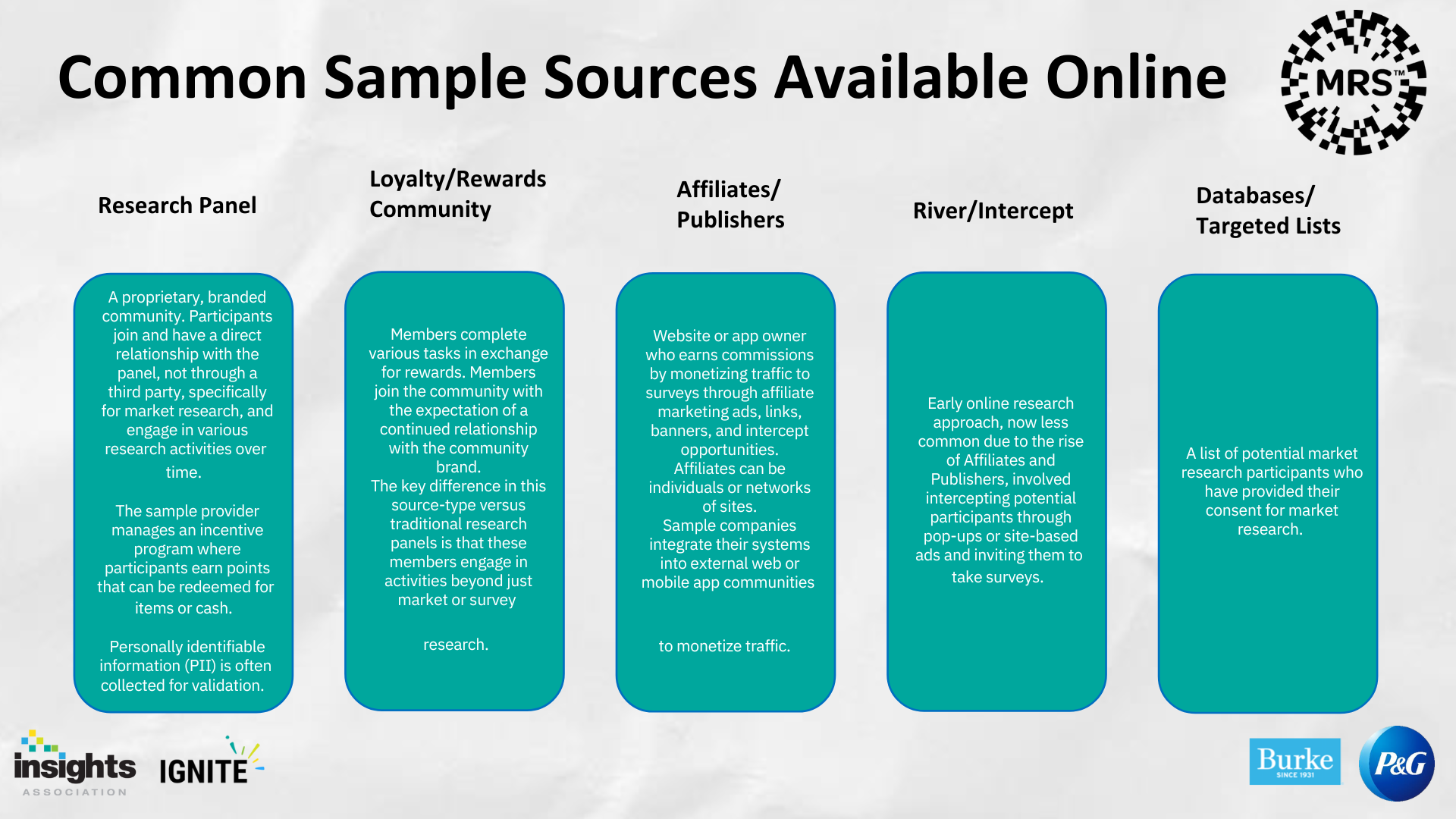

Tia Maurer, Group Scientist at Procter and Gamble and member of the Case4Quality team advocating changes for a better data ecosystem, recently presented at an Insights Association (IA) event on fraud in research and on where suppliers obtain their sample. I have copied a page from her presentation showing the five typical sample sources.

*sample sources provided by The Market Research Society

I regularly use a cooking analogy when I speak with clients about sample and where they obtain it: “Do you care how the sausage is made?” Clients who care about data quality always say “yes”: knowing how the sausage is made means understanding the origins of your sample, and in turn knowing where your data is coming from. As everyone knows, the ingredients make the dish. Loyalty/rewards, affiliates/publishers and river/intercept all tap into outside communities in hopes their members can be converted into completed surveys (these sources are also terrible for qual, as we proved in our research). However, there’s no way of identifying who that person is: the hope is that they are who they say they are and that they provide authentic feedback. These sources also have very poor response rates (read on for more on that). Databases/targeted lists typically have marginal accuracy (i.e., contact information is usually 60-80% accurate). And like the other sample sources, their response rates are low (especially now in the mobile phone era). This is why L&E builds organic panel.

How L&E Does It

When speaking with clients, I always start by outlining the fundamentals of the research ecosystem and why we complete our research via our independently owned and operated research panel. Years ago, data was typically collected by phone. You may remember the days when we all had a home phone/phone number (some still do, but analysis shows that number is now 27-29%). Now most people use mobile phones, and call screening has intensified, with mobile devices using apps to help identify (and block) callers. As a result of better screening technology (and, some would argue, over-saturation of surveys/poor surveys), response rates have plummeted. I outline this to set up the following equation for the actual study participation rate:

Study participation rate = A x B x C x D x E x F x G, where:

- A = accurate number (generally, list services would sell 80% accuracy)

- B = someone answering the phone (I’ll be generous and estimate 80%)

- C = cooperative HH (Pew Research reported 7% response rate in 2017, and getting worse)

- D = qualified for the research and completes the questions (let’s say 20%, again a generous figure in many research study cases)

- E = agrees to engage in phase 2 qual (let’s say 90%)

- F = is available at the time of phase 2 (let’s be generous and say 95%)

- G = actually completes the research (our show rates are 93%, which is high for the industry)

So, to calculate this: 80% x 80% x 7% x 20% x 90% x 95% x 93% = roughly 0.7%, meaning fewer than 1 in 100 complete the study.

Less than 1% success is cost prohibitive for most clients; thus, we build organic panel by finding people interested in sharing their information and welcoming our engagements in exchange for participation in research studies. Turning data accuracy (A), response (B) and cooperation (C) into nearly 100% makes our overall completion rate considerably higher. 1.6 million people later and growing…that’s how we solve the sample problem.
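For readers who want to check the arithmetic, here is a minimal sketch of the funnel in Python. The stage rates are the ones quoted above; the dictionary keys and the function name are illustrative labels, and treating “nearly 100%” for A, B and C as exactly 100% is a simplifying assumption, not a measured figure.

```python
from math import prod

# Stage rates from the phone-recruitment example above (A through G).
phone_funnel = {
    "A_accurate_number": 0.80,
    "B_answers_phone": 0.80,
    "C_cooperative_household": 0.07,
    "D_qualifies_and_completes": 0.20,
    "E_agrees_to_phase_2": 0.90,
    "F_available_for_phase_2": 0.95,
    "G_shows_and_completes": 0.93,
}


def participation_rate(funnel):
    """Multiply the stage rates to get the end-to-end participation rate."""
    return prod(funnel.values())


# Assumption: "nearly 100%" for A, B and C is modeled as exactly 1.0.
panel_funnel = {
    **phone_funnel,
    "A_accurate_number": 1.0,
    "B_answers_phone": 1.0,
    "C_cooperative_household": 1.0,
}

phone_rate = participation_rate(phone_funnel)
panel_rate = participation_rate(panel_funnel)

print(f"Purchased phone list: {phone_rate:.2%}")  # about 0.71%
print(f"Organic panel:        {panel_rate:.2%}")  # about 15.90%
print(f"Phone contacts per completed participant: {1 / phone_rate:.0f}")  # about 140
```

Under these assumptions, the same study moves from roughly 1 completion per 140 contacts to roughly 1 in 6, which is the gap the panel is meant to close.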

The downside of a panel is that it attracts bad actors. The industry calls them cheaters and repeaters (people who lie to get into studies, as taught in the training classes run by Paid For Your Say). L&E does a lot to weed out these bad actors:

- We check IDs. Over 90% of our panel has been ID-validated. Online or in person, we require a driver’s license or passport to participate.

- We constantly scrub our panel. Duplicate phone numbers, addresses, email addresses…we’re always ferreting out people that are attempting to game the system, utilizing both technology and full-time staff to “clean” our panel.

- Geofencing: as a company exclusively providing US panel, we firewall out all traffic from outside the US. A device outside the US has an IP address that reveals its geo-location unless the person uses a VPN, so we also block most VPNs and validate the few VPN-accessed accounts that we allow.

- We use a series of steps that require human engagement, so that humans verify the human on the other end (for example, a tech check for online research).
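To make the duplicate scrub concrete, here is a minimal Python sketch of one way to flag accounts that share contact details. It is an illustration under stated assumptions, not a description of L&E’s actual systems: the record fields, the function names and the normalization rules are all hypothetical.

```python
from collections import defaultdict


def normalize_email(email):
    """Lowercase and trim so trivially different spellings compare equal."""
    return email.strip().lower()


def normalize_phone(phone):
    """Keep digits only and drop a leading US country code."""
    digits = "".join(ch for ch in phone if ch.isdigit())
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits


def find_shared_contacts(panelists):
    """Return {(field, value): [ids]} for values used by more than one account."""
    seen = defaultdict(list)
    for p in panelists:
        seen[("email", normalize_email(p["email"]))].append(p["id"])
        seen[("phone", normalize_phone(p["phone"]))].append(p["id"])
    return {key: ids for key, ids in seen.items() if len(ids) > 1}


# Hypothetical records for illustration only.
panelists = [
    {"id": 1, "email": "Pat@example.com", "phone": "(555) 010-0101"},
    {"id": 2, "email": "pat@example.com ", "phone": "555-010-0199"},
    {"id": 3, "email": "sam@example.com", "phone": "+1 555 010 0101"},
]

flagged = find_shared_contacts(panelists)
for (field, value), ids in flagged.items():
    print(f"Shared {field} {value}: accounts {ids} need manual review")
```

A match here is only a signal for staff to review (household members can legitimately share a phone number), which is why a check like this would be paired with full-time staff rather than used to remove accounts automatically.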

As you can see, we do quite a bit to deliver quality sample for our clients’ research. Despite this, we still have fraud issues. When we discovered Paid For Your Say, we found that some people in our panel were in her audience. As a result, we planted a spy in her network to identify as many members of her audience in our panel as possible, allowing us to quarantine them (our spy is still there, in fact, as P4YS hasn’t found us yet!). We have thousands of accounts we’ve labeled in our systems as fraudulent or “do not call” from a variety of quality control steps like this. There are other examples I could provide of how fraud occurs and how we combat it. But just like in the financial sector, when the financial opportunity exists, people will try to figure out how to cheat the system (fraud). And as long as we continue to operate in this ecosystem, we’ll always be reacting to those efforts.

What L&E Is Adding to Improve Data Quality Even More

I am heartened by the efforts that some in the industry are making, like Case4Quality. However, the problems in the sample industry are multifold:

- Any ecosystem that promises rewards if you provide the right answers will always encourage dishonesty from people hoping to earn those rewards.

- Our industry has tossed itself overboard with companies promising they can provide all three elements of the “business triangle”: quality, speed and price. Poor sample is cheap and it is fast…and until recently, quality was not really validated, only assured as good by the supplier (unlike a bad meal that would make you sick, there was no way to identify bad sample until after the fact).

- Brands often seek low-incidence audiences. Panels can track demographics, but behavior and attitude are always changing. To date, clients have tried to solve this with innumerable questions to confirm the accuracy of the participant. However, this results in a poor experience for consumers, who answer lengthy questionnaires/screeners, rarely meet the brands’ specifications, and thus don’t get to do the rewarding part: participation in the qualitative research that pays.

In short, we’ve created an ecosystem that encourages fraudsters (people that will do anything in hopes they get the reward) and discourages the majority of people that just would like to share their opinions (people that answer honestly, but as a result of low incidence research, rarely qualify, thus suffering through a miserable experience).

I am excited to share that L&E has launched, or is launching this month, several initiatives to create a better marketplace where consumers and brands can be connected, for better research outcomes, creating a better experience for both researcher and participant.

» The launch of our mobile app, with RealEyes Verify™ technology that will link facial recognition with a user’s research account.

» Behavioral data collection via the app, including geofencing, web browsing and purchase behavior tracking.

» Rewards for all panelist engagements. We have been testing this in select markets, and the results were overwhelmingly positive. We will be converting our entire panel ecosystem this year to a reward-based experience.

» The launch of our self-serve platform, CondUX, which enables researchers to manage the entire research process, with qualitative and quantitative tools to execute.

Through our app, we will enable a more rewarding experience for the consumer while providing an easily verified identity solution (do you share your phone with anyone???) that also collects behavioral information passively, reducing question fatigue for the participant. When the consumer wins with a better experience, brands will win with better data.

Will your costs go up? Yes, a little. As stated previously, delivering on speed, quality and price all at once is not achievable in any industry. But when one considers the negative impact of bad data on brand decision making, we’re confident that paying a little more for high-quality sample that can be delivered rapidly will be game-changing for brands. And for the first time, we will be opening up our panel to quantitative research at scale, at costs competitive with traditional quantitative panel solutions.

Close

I hope this letter has proven helpful to you as it relates to the industry, and the initiatives we are taking to create better research outcomes. Brands have begun engaging us, and the industry at large, bringing forth ideas and innovations to make the ecosystem better from the elements they can control (e.g. shorter surveys/screeners). It’s time for the industry to innovate as well. This is our way of delivering better sample, as well as bringing new data solutions to the forefront, to deliver better research results. I’ll be speaking more about this in the coming months…I believe a revolution in market research is underway.

All the best,

Brett